VMware NSX - Multi-site design options - recommendations - scenarios

DISCLAIMER - THIS IS REMINDER BASED POST - ALL INFORMATION AVAILABLE AT OFFICIAL MULTI LOCATION DESIGN GUIDE HERE

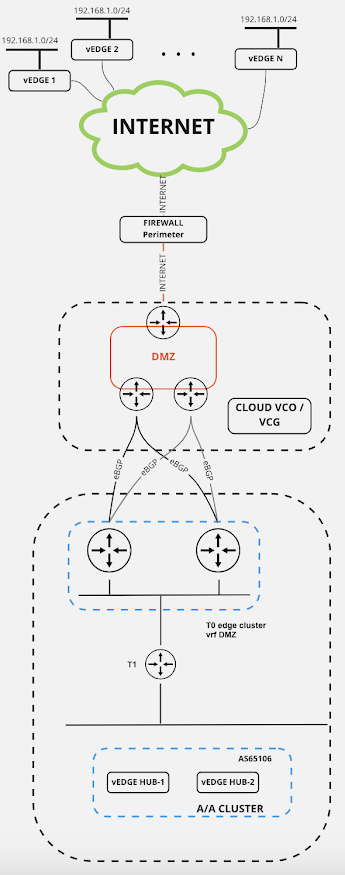

One of the fundamental scenarios that a datacenter SDN solution like VMware NSX can fulfil is a multisite setup, which lets the Customer use multiple DC locations over any L1/L2/L3 inter-site link, provided the link supports a larger MTU for the overlay encapsulation used inside NSX. It is worth mentioning that the security built into NSX can also follow the multi-location logic needed for this type of implementation. There are two main concepts behind the multisite setup offered by NSX:

- NSX Multisite - single NSX manager cluster managing transport nodes in different locations, and

- NSX Federation - introduced in NSX v3.x, with 1 central global management cluster (global manager) and 1 NSX manager cluster per location (local manager).

In this article I will follow up on the design decisions and recommendations for the first option, as I see it as the more popular one with most of (my 😊) Customers these days.

Regarding the management cluster (i.e. control plane):

- Multisite deployment with 2 or 3 locations AND latency (RTT - Round Trip Time) <10ms without congestion --> recommended to split the 3 NSX managers across two, or even three, locations if possible. Note that if you lose the location hosting 2 of the 3 NSX managers, the management plane service stops, because a minimum of 2 valid cluster members is mandatory. The same recommendation applies when 3 locations are present: one NSX manager per location is possible, with the same requirements as above. An NSX manager cluster split across locations does not require a VLAN stretched across them, except when the cluster VIP is used; without a stretched VLAN, an external load balancer VIP must be used instead;

- Latency RTT >10ms means that all 3 NSX managers should be in one single location, and two options are possible from the management cluster network perspective:

  - stretched management VLAN between locations available - cluster VIP could be used

  - stretched management VLAN not available - VIP can be used, with an external load balancer
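As a quick reminder aid, the manager cluster rules above can be sketched as a small lookup. This is only an illustration under stated assumptions: the names `ManagerPlacement` and `plan_manager_cluster` are hypothetical, and an RTT of exactly 10ms is treated like the >10ms case, which the text above does not specify.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ManagerPlacement:
    layout: str  # where the three NSX manager nodes run
    vip: str     # which kind of VIP fits that layout


def plan_manager_cluster(rtt_ms: float, mgmt_vlan_stretched: bool) -> ManagerPlacement:
    """Map inter-site RTT and management VLAN stretch to a placement hint."""
    # Below 10ms RTT the managers may be split across locations;
    # otherwise (assumption: including exactly 10ms) keep them in one location.
    layout = "split across locations" if rtt_ms < 10 else "single location"
    # The cluster VIP needs the stretched management VLAN;
    # without it, an external load balancer VIP is used.
    vip = "cluster VIP" if mgmt_vlan_stretched else "external load balancer VIP"
    return ManagerPlacement(layout, vip)


print(plan_manager_cluster(rtt_ms=5, mgmt_vlan_stretched=False))
```

The helper only encodes the two inputs discussed here (RTT and VLAN stretch); congestion and the number of locations still need a human decision.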

- Latency <10ms across locations AND T0 in A/S mode --> recommendation to create stretched edge clusters with nodes in 2 locations AND usage of failure domains;

- Latency >10ms across locations OR T0 in A/A mode needed --> non-stretched edge clusters without failure domains

- Specific use case - latency <10ms across locations AND neither the increase in cross-location traffic nor asymmetric routing is a concern --> T0 in A/A mode with stretched cluster
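The three edge cluster rules above can be written down as a tiny decision helper. Again this is a sketch and not an official sizing tool: `T0Mode`, `plan_edge_cluster` and the `cross_site_effects_acceptable` flag are hypothetical names, and the flag stands for "increased cross-location traffic and asymmetric routing are both acceptable".

```python
from enum import Enum


class T0Mode(Enum):
    ACTIVE_STANDBY = "A/S"
    ACTIVE_ACTIVE = "A/A"


def plan_edge_cluster(rtt_ms: float, t0_mode: T0Mode,
                      cross_site_effects_acceptable: bool = False) -> str:
    """Return the edge cluster layout suggested by the rules above."""
    if rtt_ms < 10 and t0_mode is T0Mode.ACTIVE_STANDBY:
        return "stretched edge cluster with failure domains"
    # Specific use case: A/A over a low-latency link where cross-location
    # traffic and asymmetric routing are not concerns.
    if rtt_ms < 10 and cross_site_effects_acceptable:
        return "stretched edge cluster with T0 in A/A mode"
    # Everything else: high latency, or A/A without that tolerance.
    return "non-stretched edge clusters without failure domains"


print(plan_edge_cluster(4, T0Mode.ACTIVE_STANDBY))
```

The order of the checks matters: the A/S case is evaluated first, so the tolerance flag only changes the answer for A/A.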

- Metropolitan region distance (aka <10ms inter site link RTT) - management plane in 1 location - WITH L2 VLAN management stretched --> vSphere HA or SRM

- Two large distance locations (<10ms inter site link RTT) - management plane in 2 locations - WITH L2 VLAN management stretched --> SRM

- Large distance region (>10ms inter site link RTT) - WITH L2 VLAN management stretched --> SRM

- Large distance region (>10ms inter site link RTT) - WITHOUT L2 VLAN management stretched --> integrated backup/restore + NSX managers with FQDN / IP with short TTL

- RTT<10ms between locations AND N/S traffic below performance of 1 edge node --> stretched cluster with failure domains

- RTT>10ms between locations OR N/S traffic above performance of 1 edge node --> non-stretched edge cluster without failure domains. You may need to re-attach the T1s to the T0s in the second location, through the NSX UI or scripted via an API call!
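For the scripted T1 re-attach mentioned in the last point, a minimal sketch could look like the one below. It assumes the NSX Policy API, where a Tier-1 gateway lives under `/policy/api/v1/infra/tier-1s/<id>` and points at its Tier-0 through the `tier0_path` property. The manager FQDN and the gateway IDs are made-up examples, and the function name `build_t1_reattach_request` is hypothetical. The code only builds the request; authentication, certificate handling and actually sending it are left out on purpose.

```python
import json
import urllib.request


def build_t1_reattach_request(manager: str, tier1_id: str,
                              tier0_id: str) -> urllib.request.Request:
    """Build (but do not send) a PATCH that points a T1 at another T0."""
    url = f"https://{manager}/policy/api/v1/infra/tier-1s/{tier1_id}"
    # Only the T0 link changes; PATCH leaves the other T1 settings untouched.
    body = json.dumps({"tier0_path": f"/infra/tier-0s/{tier0_id}"}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="PATCH",
        headers={"Content-Type": "application/json"},
    )


# Hypothetical names: manager FQDN, T1 ID and the T0 ID in the second location.
req = build_t1_reattach_request("nsx.example.com", "t1-app", "t0-site-b")
print(req.get_method(), req.full_url)
```

In a real recovery runbook you would loop over all affected T1s and send each request with credentials for the NSX manager, ideally after testing it against a lab environment.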

Maximum 150ms latency (RTT) between locations

- If congestion across locations is possible - recommended to configure QoS to prioritise NSX management plane traffic: NSX-T managers to transport nodes (edge nodes and hypervisors)

- For management plane between NSX-T Managers and transport nodes

- For data plane between transport nodes

- Public IP addresses (segments, NAT, load balancer VIP) must be advertisable from both locations

- Maximum 10ms latency (RTT) between locations

- L2-VLAN management stretch across the different locations

- vCenter Cluster stretched between locations with vSphere-HA

- DNS name resolution for NSX-T managers

Networking

- All networking features are supported, but NO local-egress support

- Each T0 has all its N/S traffic via one single location - different T0 can have their N/S traffic via different locations though

- No T0 Active/Active (ECMP) with automatic DR - automatic DR supports only T0 Active/Standby, with the exception of T0 Active/Active for metropolitan data centres only (<10ms latency) and where asymmetric traffic is possible (no physical firewall across data centres)

- Malware Prevention (NAPP does not support multi-location)

- Network Detection and Response (NAPP does not support multi-location)

- Network Introspection Cluster-based support

- Network Introspection Host-based support

- Endpoint Protection support

- Virtual Machines on ESXi

- Physical Servers NSX prepared

- Containers
