NSX ALB (Avi) BGP scaling using ECMP and RHI

The NSX ALB (formerly Avi Networks) solution supports different methods of scaling in the data plane, providing the required throughput, high availability and scalability, both vertically and horizontally. In a typical scale-out of a virtual service, which can be triggered manually or automatically, service engines (SEs) are added to the group while the existing VIP (virtual service IP) is maintained; scale-in is the reverse process. Two scaling methods are available:

- L2 scale-out mode - the VIP is always mapped to the "primary" SE, which is also responsible for distributing traffic to the other SEs in the group, so this node carries a heavier load and becomes a dependency. Return traffic can go directly from the secondary SEs (Direct Secondary Return mode) or via the primary SE (Tunnel mode). In Direct Secondary Return (DSR) mode, return traffic uses the VIP as the source IP and the secondary SE's MAC as the source MAC. For this to work, "ARP Inspection" must be disabled in the network, i.e. the network layer must not inspect, block or learn the MAC of the VIP from these packets (such inspection can occur in some OpenStack and Cisco ACI setups, among others), and

- L3 scale-out using BGP - uses BGP features such as Route Health Injection (RHI) and Equal-Cost Multi-Path (ECMP) to provide dynamic VS load balancing and scaling. DSR does not apply here, because ARP is resolved for the next hop, which is the upstream BGP router, and that router in turn performs ECMP towards the individual SEs. Return traffic uses the respective SE's MAC as the source MAC and the VIP as the source IP.

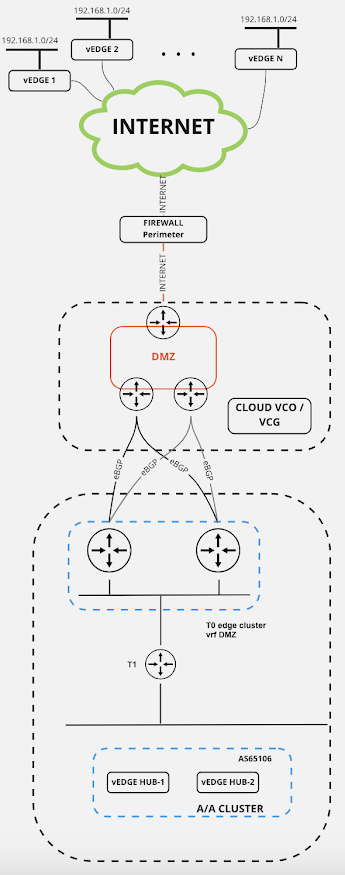
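The ECMP behaviour in the L3 scale-out mode above can be illustrated with a small sketch. This is not how any particular router implements ECMP; it only assumes that the upstream router hashes each flow's 5-tuple and picks one of the equal-cost next hops (the SEs). The SE addresses and the function name `pick_next_hop` are hypothetical, chosen to fit the lab's data network.

```python
import hashlib


def pick_next_hop(flow, next_hops):
    """Pick one next hop for a flow by hashing its 5-tuple (illustrative only)."""
    if not next_hops:
        raise ValueError("no next hops available for this prefix")
    key = "|".join(str(field) for field in flow).encode()
    digest = hashlib.sha256(key).digest()
    index = int.from_bytes(digest[:8], "big") % len(next_hops)
    return next_hops[index]


# Hypothetical SE addresses in the data network (192.168.102.0/24).
se_next_hops = ["192.168.102.11", "192.168.102.12", "192.168.102.13"]

# Flows as (src IP, dst IP, protocol, src port, dst port); the destination is a VIP.
flows = [
    (f"10.0.0.{i}", "192.168.103.10", "tcp", 40000 + i, 443)
    for i in range(1, 201)
]

# Every packet of a flow hashes to the same SE, so connections stay on one SE.
assignment = {flow: pick_next_hop(flow, se_next_hops) for flow in flows}

# If one SE withdraws its route, the router hashes over the remaining next hops.
remaining = [hop for hop in se_next_hops if hop != "192.168.102.12"]
after_withdraw = {flow: pick_next_hop(flow, remaining) for flow in flows}
```

With plain modulo hashing as sketched here, some flows that were on surviving SEs may also move when the next-hop set changes; how real routers handle this varies by vendor and platform.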

Because L2 scaling is fairly easy to test and implement, I would like to show the BGP setup instead, which uses RHI and ECMP behind the scenes. This type of setup is currently supported in the following ecosystems: VMware, Linux server (bare metal) and OpenShift/Kubernetes, where the last cloud type is not available above Avi v18. The main lab setup, shown in the image below, consists of:

- two BGP routers acting as neighbours (RIGHT and LEFT),

- NSX ALB setup with controllers and service engines in the data plane, with a vSphere cloud integration created for the test,

- Data network - used for SE-BGP neighbour establishment and the rest of the data traffic - 192.168.102.0/24,

- VIP network - L3 addressing for virtual services - 192.168.103.0/24,

- backend web server inside an NSX-T segment, on its own L3 segment.

In the basic configuration, BGP sessions are established between the SEs in the vSphere cloud and the upstream routers, and each VIP is advertised out of the NSX ALB domain as a /32 host route (the RHI part). ECMP is part of the BGP support; the maximum number of paths is configured on the router side and is vendor specific.
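The RHI part can be sketched in a few lines. This is only a model of the idea, not Avi's implementation: a VIP's /32 host route is advertised while its virtual service is up and is left out (withdrawn) otherwise. The VIP network comes from the lab description; the service names, VIP addresses and the `up` flag are hypothetical.

```python
import ipaddress

# VIP network from the lab description.
VIP_NETWORK = ipaddress.ip_network("192.168.103.0/24")


def host_route(vip):
    """Return the /32 host route for a VIP, checking it lies in the VIP network."""
    address = ipaddress.ip_address(vip)
    if address not in VIP_NETWORK:
        raise ValueError(f"{vip} is outside {VIP_NETWORK}")
    return ipaddress.ip_network(f"{address}/32")


def routes_to_advertise(virtual_services):
    """RHI idea: advertise a VIP's host route only while its service is up."""
    return sorted(host_route(vs["vip"]) for vs in virtual_services if vs["up"])


# Hypothetical virtual services; "up" stands in for the health state.
virtual_services = [
    {"name": "vs-web", "vip": "192.168.103.10", "up": True},
    {"name": "vs-api", "vip": "192.168.103.11", "up": False},
]

for route in routes_to_advertise(virtual_services):
    print(route)
```

Here only the route for `vs-web` is printed, because `vs-api` is marked down and its host route would not be announced.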

BGP networking can be configured through the GUI or the CLI. In the GUI it is available per configured cloud, under Infrastructure -> Cloud resources -> Routing -> BGP peering:

Picture 2. BGP peering overview per configured cloud (Avi uses a Quagga-based daemon for BGP configuration and setup)

Picture 3. Routing options information inside BGP profile

Picture 4. BGP peers configuration inside profile

An interesting option, besides the others which are fairly self-explanatory, is the Label field, available in both the routing and the BGP peer configuration. It allows per-neighbour route announcement: routes are announced to the BGP peers that carry the same label value.

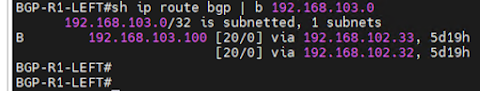
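The label matching described above can be modelled as a simple filter. This is a sketch under assumptions, not the product's logic: it only covers the case where both the peer and the VIP carry a label, and it does not model what happens to unlabelled VIPs or peers. The label values and VIP addresses are hypothetical; the peer names follow the lab routers.

```python
def announcements(peers, vips):
    """Map each peer to the VIP host routes whose label matches the peer's label."""
    result = {}
    for peer in peers:
        result[peer["name"]] = sorted(
            f'{vip["address"]}/32'
            for vip in vips
            if vip["label"] == peer["label"]
        )
    return result


# Hypothetical labels on the two lab routers.
peers = [
    {"name": "LEFT", "label": "left"},
    {"name": "RIGHT", "label": "right"},
]

# Hypothetical VIPs in the VIP network, each tagged with one label.
vips = [
    {"address": "192.168.103.10", "label": "left"},
    {"address": "192.168.103.20", "label": "right"},
    {"address": "192.168.103.30", "label": "left"},
]

for peer_name, routes in announcements(peers, vips).items():
    print(peer_name, routes)
```

In this model LEFT would receive the two routes labelled `left` and RIGHT only the one labelled `right`.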

Checking the other side of the BGP sessions, in this example the routers RIGHT and LEFT running Cisco IOS, confirms the result: the VIPs appear in the routing table as host routes:

Picture 5. NSX ALB service engines acting as BGP neighbours

Picture 6. Virtual service IP shown as host route inside routing table

In summary, the BGP setup has a couple of limitations and you should not expect the full range of BGP configuration options, but everything needed for reachability of the virtual services is configurable. L3 scale-out is fully supported, and for large-scale services it can be planned as the best option inside the customer infrastructure.

Finally, I would like to mention some very useful links with deeper explanations of the above subject, directly from the Avi Networks portal:
