NSX-T T0 BGP routing considerations regarding MTU size

Recently I had a serious NSX-T production issue involving BGP and the T0 routing instance on an Edge VM cluster: routes that should have been received from the ToR L3 device were missing from the T0 routing table.

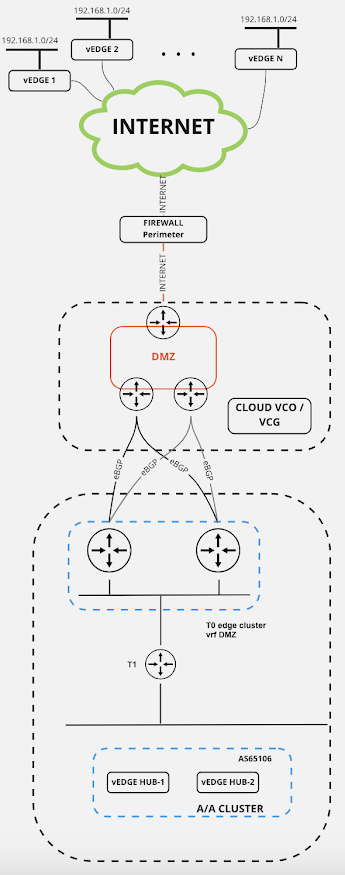

An NSX-T environment has several options for connecting the fabric to the outside world. These connections go through the T0 instance, which can be configured to use static or dynamic routing (BGP, OSPF) for this purpose. MTU is an important consideration inside an NSX environment because of GENEVE encapsulation in the overlay (the MTU should be at least 1600 bytes, ideally 9k). Routing protocols are also subject to MTU checks (OSPF is out of scope for this article, but recall that it checks MTU during neighbor establishment).
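To see where the 1600-byte minimum comes from, here is a rough sketch of the GENEVE overhead arithmetic. The header sizes are the standard ones for GENEVE over IPv4. The function name and the 50-byte options figure in the usage note are illustrative assumptions, not NSX settings.

```python
# Per-packet overhead added by GENEVE over IPv4, in bytes.
INNER_ETHERNET = 14  # inner Ethernet header carried inside the tunnel (no VLAN tag)
GENEVE_BASE = 8      # fixed GENEVE header; variable-length options come on top
OUTER_UDP = 8
OUTER_IPV4 = 20


def required_underlay_mtu(guest_mtu: int, geneve_options: int = 0) -> int:
    """Smallest underlay IP MTU that carries a guest packet without fragmentation."""
    return (guest_mtu + INNER_ETHERNET + GENEVE_BASE
            + geneve_options + OUTER_UDP + OUTER_IPV4)


if __name__ == "__main__":
    print(required_underlay_mtu(1500))      # no GENEVE options
    print(required_underlay_mtu(1500, 50))  # hypothetical 50 bytes of options
```

With a 1500-byte guest MTU the fixed headers alone need 1550 bytes, so 1600 leaves some room for GENEVE options.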

Networking vendors use various mechanisms for path MTU discovery. For BGP these mechanisms should be enabled by default, but of course this should be checked and confirmed. The problem arises when you configure the ToR as, e.g., a 9k MTU capable device, and BGP then has trouble exchanging routes. Let's look at a scenario where the MTU on the ToR does not match the MTU of the Edge and T0 instance inside NSX-T (e.g. 1600 bytes by default):

- BGP session is established and UP

- routes show up correctly in the ToR BGP/routing table

- THERE ARE NO ROUTES in the T0 routing table (they will probably show up if there are only a few of them, e.g. only the DEFAULT route)

- BGP session goes UP/DOWN after 3 min (default BGP HOLD timer = 180s)
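The 3-minute flap in the last symptom follows from how the BGP hold timer works: it restarts each time a KEEPALIVE or UPDATE is received, and the session is torn down when it expires. A minimal sketch of that logic follows, assuming that no message reaches T0 once the oversized updates stall the TCP stream. The function and its arguments are hypothetical, not part of any NSX tooling.

```python
HOLD_TIME = 180  # default BGP hold time in seconds


def session_drop_time(message_arrivals, hold_time=HOLD_TIME, start=0):
    """Return the time (s) at which the hold timer expires.

    message_arrivals: times at which a KEEPALIVE or UPDATE was actually
    delivered to the peer, counted from session establishment at `start`.
    """
    last = start
    for t in sorted(message_arrivals):
        if t - last > hold_time:
            # The timer already expired before this message arrived.
            return last + hold_time
        last = t  # each received message restarts the hold timer
    # Nothing else is delivered after the last message.
    return last + hold_time


if __name__ == "__main__":
    # Nothing delivered after establishment: the session drops after the hold time.
    print(session_drop_time([]))
```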

Several troubleshooting techniques can be used inside NSX to confirm this:

- get logical-router, followed by selecting the correct vrf # of the T0 SR

- get bgp neighbors summary

- get route bgp (if we expect routes here and see 0, the problem is present)

- capture packets (the expression "port 179" is useful to capture only BGP traffic)

What's happening behind the scenes:

- BGP session is UP because the initial packet exchange stays below the Edge MTU, which is the smaller of the two

- ToR has routes because it is configured for the larger MTU

- T0 HAS NO ROUTES because the BGP update packet is larger than its configured MTU (except when there is only one route or a small number of them, as mentioned above)
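The difference between "a few routes work" and "many routes fail" can be illustrated with a rough size estimate. This is a simplified sketch: it treats one BGP UPDATE as one IPv4/TCP packet without options, and the 30 bytes of path attributes is an assumed placeholder, since the real value depends on AS path, communities and so on. The 19-byte BGP header, the two 2-byte length fields and the NLRI encoding come from the BGP-4 specification.

```python
import math

BGP_HEADER = 19          # marker (16) + length (2) + type (1)
UPDATE_FIXED = 4         # withdrawn-routes length (2) + path-attribute length (2)
IP_TCP_HEADERS = 40      # IPv4 (20) + TCP (20), no options
ASSUMED_ATTR_BYTES = 30  # assumption: placeholder for the path attributes


def bgp_update_size(prefix_count, prefix_len=24, attr_bytes=ASSUMED_ATTR_BYTES):
    """Approximate size in bytes of one UPDATE advertising equal-length IPv4 prefixes."""
    nlri_entry = 1 + math.ceil(prefix_len / 8)  # length byte + prefix bytes
    return BGP_HEADER + UPDATE_FIXED + attr_bytes + prefix_count * nlri_entry


def fits_in_mtu(update_bytes, mtu):
    """True if the UPDATE plus IP/TCP headers fits in a single packet of this MTU."""
    return update_bytes + IP_TCP_HEADERS <= mtu


if __name__ == "__main__":
    default_only = bgp_update_size(1, prefix_len=0)
    many_routes = bgp_update_size(500, prefix_len=24)
    for name, size in (("default only", default_only), ("500 x /24", many_routes)):
        print(name, size, fits_in_mtu(size, 1600), fits_in_mtu(size, 9000))
```

Under these assumptions a lone default route fits comfortably in any MTU, while an update carrying 500 /24 prefixes fits in a 9k packet but not in a 1600-byte one.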

Summary: MTU should be checked and confirmed:

- using the vmkping command inside the overlay, between TEPs on transport and edge nodes

- the ToR-T0 BGP session over the Edge uplink can use a large MTU, but it must be configured the same on both sides. A LARGE MTU IS NOT A REQUIREMENT on this BGP uplink, because the traffic here is not GENEVE traffic and, in most cases, you will not change the MTU to 9k across your whole networking infrastructure

- if the ToR BGP neighbor is configured with a system MTU of 9k, configure the physical interface (or the SVI, if using a VLAN-based one) facing the NSX T0 with the same MTU as inside NSX

- changing the MTU inside NSX is of course also an option
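For the vmkping check, the ICMP payload size has to be the MTU minus the IPv4 and ICMP headers, with the don't-fragment bit set. The helper below is a small sketch that builds the command string. The `vxlan` netstack name is an assumption about how the TEP vmkernel interface is set up on the host, and the target address is a documentation placeholder.

```python
IPV4_HEADER = 20
ICMP_HEADER = 8


def ping_payload(mtu: int) -> int:
    """Largest ICMP payload that fits in one unfragmented IPv4 packet of this MTU."""
    return mtu - IPV4_HEADER - ICMP_HEADER


def vmkping_command(mtu: int, target_ip: str, netstack: str = "vxlan") -> str:
    """Build a vmkping command line; -d sets don't-fragment, -s sets the payload size."""
    return f"vmkping ++netstack={netstack} -d -s {ping_payload(mtu)} {target_ip}"


if __name__ == "__main__":
    # 192.0.2.10 is a placeholder; use the remote TEP address instead.
    print(vmkping_command(1600, "192.0.2.10"))
    print(vmkping_command(9000, "192.0.2.10"))
```

If the ping with the full-size payload fails while a smaller one succeeds, something along the path has a smaller MTU than expected.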
